The Long Quest for Knowledge Graphs

In the buzzing world of data architectures, one term seems to unite several previously competing buzzword paradigms. That term is “Knowledge Graphs”.

The term was coined as early as 1972 by the Austrian linguist Edgar W. Schneider, in a discussion of how to build modular instructional systems for courses. So, it is a European idea. It took many years for it to make a real breakthrough. That happened in the US: in 2012, Google published a blog post called "Introducing the Knowledge Graph: things, not strings". Google's Knowledge Graph was initially built partly on top of DBpedia and Freebase, and was extended with impressive amounts of information from many other sources. Most tech companies joined the movement, including Facebook, LinkedIn, Airbnb, Microsoft, Amazon, Uber and eBay, to mention a few.

Two Technologies are Used in Knowledge Graphs

The first category, knowledge management technologies, came out of the semantic web community and is based on concepts such as RDF (Resource Description Framework, a stack for defining semantic databases, taxonomies and ontologies), the open world assumption, linked open data (on the web), and semantics with inferencing. That movement started in 1999, but the semantic industry struggled to get market attention. Many of its supporters said: “We need a killer application!” Well, knowledge graphs are just that. Congratulations!
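To make “semantics with inferencing” and the open world assumption a little more concrete, here is a minimal sketch in plain Python, without any RDF library. The `ex:` names are hypothetical, and only two RDFS entailment rules are implemented (subclass transitivity and type inheritance); a real RDF store or reasoner does far more.

```python
"""Toy triple store: RDF-style triples, RDFS subclass inference, open-world 'ask'."""
from typing import Optional

# Triples are (subject, predicate, object); prefixes are illustrative, not resolved IRIs.
RDF_TYPE = "rdf:type"
SUBCLASS = "rdfs:subClassOf"

triples = {
    ("ex:Neo4j", RDF_TYPE, "ex:GraphDatabase"),
    ("ex:GraphDatabase", SUBCLASS, "ex:Database"),
    ("ex:Database", SUBCLASS, "ex:Software"),
}


def infer(facts: set) -> set:
    """Apply two RDFS rules until nothing new is derived:
    rdfs11 (subClassOf is transitive) and rdfs9 (instances inherit superclasses)."""
    closure = set(facts)
    while True:
        new = set()
        for (s, p, o) in closure:
            for (s2, p2, o2) in closure:
                if p == SUBCLASS and p2 == SUBCLASS and o == s2:
                    new.add((s, SUBCLASS, o2))  # rdfs11
                if p == RDF_TYPE and p2 == SUBCLASS and o == s2:
                    new.add((s, RDF_TYPE, o2))  # rdfs9
        if new <= closure:
            return closure
        closure |= new


def ask(facts: set, triple: tuple) -> Optional[bool]:
    """Open world: a stated or inferred triple is True; anything else is unknown (None), not False."""
    return True if triple in facts else None


kb = infer(triples)
print(ask(kb, ("ex:Neo4j", RDF_TYPE, "ex:Software")))     # True (inferred)
print(ask(kb, ("ex:Neo4j", RDF_TYPE, "ex:Spreadsheet")))  # None (unknown)
```

The point of returning `None` rather than `False` is the open world assumption: a missing fact means "not known", not "not true".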

The other technology category is Property Graphs, which is what most of this website is about.

Can you build semantic networks in property graphs? Yes, you can! Look up, for example, Neo4j's Neosemantics, and you will see that interoperability is indeed very real:

neosemantics (n10s) is a plugin that enables the use of RDF and its associated vocabularies (OWL, RDFS, SKOS and others) in Neo4j. RDF is a W3C standard model for data interchange. You can use n10s to build integrations with RDF-generating / RDF-consuming components. You can also use it to validate your graph against constraints expressed in SHACL or to run basic inferencing.

Property graphs have an easy learning curve, whereas RDF stores have a steep one. In my opinion, 80% of ordinary, day-to-day knowledge graph activities can be solved easily (i.e., quickly) and with good quality in property graphs. Querying property graphs is also considerably easier, and there is an overwhelming choice of really nice graph browsers. Finally, property graphs perform very well on highly connected graphs with many nodes and even more relationships.
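One reason property graphs feel easier is that relationships can carry properties directly. The sketch below, with hypothetical node and relationship names, contrasts one property-graph edge with its classic RDF reification (an `rdf:Statement` node). It is only an illustration; newer approaches such as RDF-star reduce this overhead.

```python
"""Sketch: one property-graph relationship with a property vs. its RDF reification."""
from dataclasses import dataclass, field


@dataclass
class Node:
    id: str
    labels: set
    props: dict = field(default_factory=dict)


@dataclass
class Relationship:
    start: str
    rel_type: str
    end: str
    props: dict = field(default_factory=dict)  # properties live directly on the edge


alice = Node("alice", {"Person"}, {"name": "Alice"})
acme = Node("acme", {"Company"}, {"name": "Acme"})
works_at = Relationship("alice", "WORKS_AT", "acme", {"since": 2019})


def reify(rel: Relationship, stmt_id: str) -> list:
    """Classic RDF reification: the edge becomes an rdf:Statement node
    so that a property ('since') can be attached to it."""
    triples = [
        (rel.start, "ex:" + rel.rel_type, rel.end),  # the plain fact itself
        (stmt_id, "rdf:type", "rdf:Statement"),
        (stmt_id, "rdf:subject", rel.start),
        (stmt_id, "rdf:predicate", "ex:" + rel.rel_type),
        (stmt_id, "rdf:object", rel.end),
    ]
    triples += [(stmt_id, "ex:" + k, v) for k, v in rel.props.items()]
    return triples


print(len(reify(works_at, "_:s1")))  # 6 triples for one annotated edge
```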

The Metadata and the Content are Related and Evolve Together

Graphs (both RDF and most property graphs) are based on the so-called open world assumption, which leads to benefits such as:

• “Schema-less” development, meaning that no schema is necessary for loading data.

• Inspecting the data contents with graph queries leads, probably over some iterations, to a better understanding of the data model: a sort of prototyping approach to data modeling.

• The structures of the graph data model can be changed iteratively (there is no schema to change).

• A canonical form of the inner graph structure is easy to derive (in your head) from the graph elements, including edges / relationships and the structures they represent. The canonical form can remain the same even after structural changes, such as reallocating properties between nodes and edges / relationships.

• It is all about the distance between the logical data model and the corresponding conceptual data model. In graph models that distance is short and easy to grasp, even without visualization, which makes graph data models both more robust and more flexible.
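The first two points can be illustrated with a minimal sketch: records are loaded without any declared schema, and a graph-shaped schema (labels, property keys, relationship patterns) is then derived by inspecting what was actually loaded. The record layout and names are hypothetical; graph databases offer their own schema introspection, which this does not attempt to reproduce.

```python
"""Sketch: load records without a schema, then derive the schema by inspection."""
from collections import defaultdict

# Hypothetical, heterogeneous input: no schema was declared before loading.
nodes = [
    {"id": 1, "labels": ["Customer"], "name": "Acme", "country": "DK"},
    {"id": 2, "labels": ["Customer"], "name": "Globex"},  # 'country' simply absent
    {"id": 3, "labels": ["Order"], "total": 120.0},
]
rels = [(1, "PLACED", 3)]


def infer_schema(nodes, rels):
    """Return {label: property keys seen} and the set of (label)-[TYPE]->(label) patterns."""
    props = defaultdict(set)
    label_of = {}
    for n in nodes:
        keys = set(n) - {"id", "labels"}
        for label in n["labels"]:
            props[label] |= keys
        label_of[n["id"]] = n["labels"]
    patterns = {
        (a, t, b)
        for (s, t, e) in rels
        for a in label_of[s]
        for b in label_of[e]
    }
    return dict(props), patterns


props, patterns = infer_schema(nodes, rels)
print(props["Customer"])  # {'name', 'country'} (set order may vary)
print(patterns)           # {('Customer', 'PLACED', 'Order')}
```

Re-running the inspection after each load is what makes this a prototyping loop: the derived schema changes as the data does.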

It is rather obvious that the metadata and the content evolve together! And in our times these changes are blindingly fast. If you want to keep up without costly re-engineering efforts, you must deal with changes in metadata and in business data as they occur. You are streaming in facts that are morphing as you look. Obviously, you must deal with:

• Dynamics are value-driven

• Impact analytics after the fact

• Integrations and lineages

• Discovery (the graph way)

• Dependencies not linear

• Outcomes and usages

• The web is a graph

• Your mesh (web) is a graph

• Know Your Space(s)!
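“Impact analytics”, “integrations and lineages” and “dependencies not linear” all boil down to graph traversal. A minimal sketch, assuming a hypothetical lineage graph where an edge (a, b) means "b is derived from a": a breadth-first search returns everything downstream of a changed asset, including assets reached along more than one path.

```python
"""Sketch: impact analysis = downstream traversal over lineage edges."""
from collections import deque

# Hypothetical lineage: an edge (a, b) means "b is derived from a".
lineage = {
    "crm.customer": ["dwh.dim_customer"],
    "erp.orders": ["dwh.fact_sales"],
    "dwh.dim_customer": ["dwh.fact_sales", "report.churn"],
    "dwh.fact_sales": ["report.revenue", "ml.forecast"],
}


def impacted_by(changed: str) -> set:
    """Breadth-first search: everything reachable downstream of a changed asset."""
    seen, queue = set(), deque([changed])
    while queue:
        for nxt in lineage.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


print(sorted(impacted_by("crm.customer")))
# ['dwh.dim_customer', 'dwh.fact_sales', 'ml.forecast', 'report.churn', 'report.revenue']
```

Note that `dwh.fact_sales` depends on two upstream sources; in a graph that non-linear dependency is just another edge.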

So, changes occur daily, and you must keep track of them in your knowledge graph!

You can do that by

- Leveraging APIs to semantic media such as Google, Apple, Microsoft etc., and / or

- Taking advantage of open semantic sources such as

  - Wikidata

  - Industry standard ontologies

  - International and national standard ontologies

  - Other more or less open sources, such as OpenCorporates and more
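As an example of tapping an open source such as Wikidata, the sketch below prepares (but does not send) a request to Wikidata's public SPARQL endpoint, asking for the classes of an item found by its English label. The query, the "Neo4j" label and the User-Agent value are illustrative assumptions; check Wikimedia's usage policy before querying at any volume.

```python
"""Sketch: preparing a query against Wikidata's public SPARQL endpoint."""
import urllib.parse
import urllib.request

ENDPOINT = "https://query.wikidata.org/sparql"

# Look an item up by its English label; wdt:P31 is Wikidata's "instance of" property.
QUERY = """
SELECT ?item ?class WHERE {
  ?item rdfs:label "Neo4j"@en ;
        wdt:P31 ?class .
}
"""


def build_request(query: str) -> urllib.request.Request:
    """Build (but do not send) a GET request asking for SPARQL JSON results."""
    url = ENDPOINT + "?" + urllib.parse.urlencode({"query": query, "format": "json"})
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/sparql-results+json",
            # Wikimedia asks clients to identify themselves; this value is a placeholder.
            "User-Agent": "kg-prototype/0.1 (contact: you@example.org)",
        },
    )


req = build_request(QUERY)
# To actually run it (network access required):
# import json
# with urllib.request.urlopen(req) as resp:
#     rows = json.load(resp)["results"]["bindings"]
```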

You can also build it in property graph technology, which has an easier learning curve than RDF.

You can use your own knowledge graph as an important part of the data contract with the business (making the requirements machine-readable).

You can use your knowledge graph to run completeness tests as well as to look for accountability features, missing information, (lack of) temporal information and so forth.

You can use a graph prototype as a test and verification platform for the business people.
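Machine-readable requirements and completeness tests fit together naturally. In the minimal sketch below, a hypothetical "data contract" lists required properties per node label, where `owner` stands in for accountability and `valid_from` for temporality; the check reports which nodes fall short. Names and rules are assumptions for illustration only.

```python
"""Sketch: requirements as data, checked against graph nodes."""

# Hypothetical machine-readable "data contract": required keys per label.
REQUIREMENTS = {
    "Customer": {"name", "owner", "valid_from"},  # owner = accountability, valid_from = temporality
    "Order": {"total", "valid_from"},
}

nodes = [
    {"id": 1, "label": "Customer", "name": "Acme", "owner": "sales", "valid_from": "2024-01-01"},
    {"id": 2, "label": "Customer", "name": "Globex"},
    {"id": 3, "label": "Order", "total": 99.5},
]


def completeness_report(nodes, requirements):
    """Map node id -> sorted list of required properties that are missing."""
    report = {}
    for n in nodes:
        missing = requirements.get(n["label"], set()) - set(n)
        if missing:
            report[n["id"]] = sorted(missing)
    return report


print(completeness_report(nodes, REQUIREMENTS))
# {2: ['owner', 'valid_from'], 3: ['valid_from']}
```

Because the contract is data, the business can review and change it without touching the checking code.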

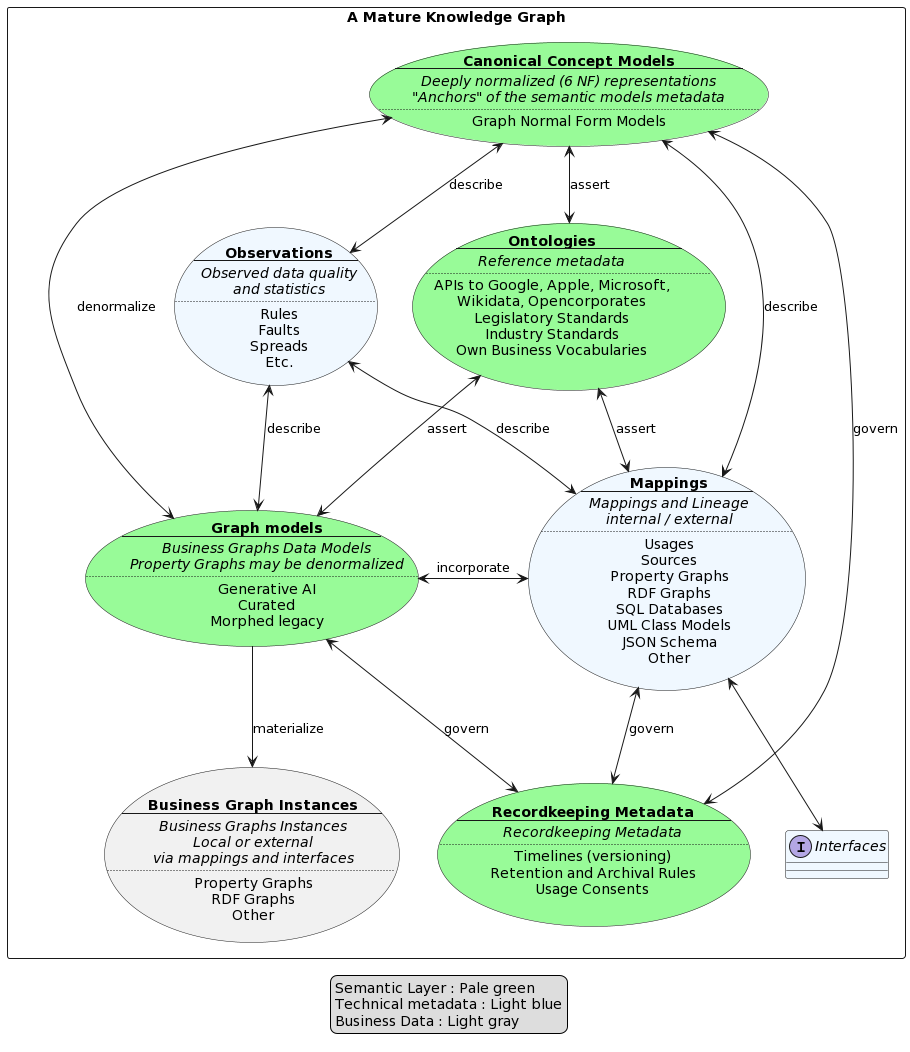

What Does My Knowledge Graph Look Like?

Well, we must build something that:

- Combines data and metadata

- Has a canonical concept model in its core

- Can work with ontologies etc. from the RDF world

- Handles various kinds of graph models

- Handles recordkeeping, including

  - timeline-based versioning

  - retention and archival

  - usage consents

- Handles mappings and observations about data quality, lineage, sources, interfaces etc.

- And, oops, I almost forgot: has fresh operational and historical data as graphs, maybe also as property graph views on top of SQL databases.
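Timeline-based versioning, one of the recordkeeping needs above, can be sketched as valid-time intervals on a property: a new value closes the current interval instead of overwriting it, so history stays queryable. This is a minimal illustration with hypothetical class names, and it assumes values are recorded in chronological order.

```python
"""Sketch: timeline-based versioning of a node property (valid-time intervals)."""
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Version:
    value: str
    valid_from: date
    valid_to: Optional[date] = None  # None = still current


class VersionedProperty:
    """Appends new versions instead of overwriting; old values stay queryable.
    Assumes record() is called in chronological order."""

    def __init__(self):
        self.versions: list = []

    def record(self, value: str, as_of: date) -> None:
        if self.versions and self.versions[-1].valid_to is None:
            self.versions[-1].valid_to = as_of  # close the current version
        self.versions.append(Version(value, as_of))

    def value_at(self, as_of: date) -> Optional[str]:
        for v in self.versions:
            if v.valid_from <= as_of and (v.valid_to is None or as_of < v.valid_to):
                return v.value
        return None  # nothing recorded for that point in time


address = VersionedProperty()
address.record("Main St 1", date(2020, 1, 1))
address.record("Harbour Rd 7", date(2023, 6, 1))
print(address.value_at(date(2021, 5, 5)))  # Main St 1
print(address.value_at(date(2024, 1, 1)))  # Harbour Rd 7
print(address.value_at(date(2019, 1, 1)))  # None
```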

There are three purpose-oriented “subgraphs”:

- The Semantic Layer (model-oriented metadata), i.e. the schema information graph

- Technical metadata describing data characteristics such as mappings, lineage, physical stores as well as data quality issues

- Business Graph Instances: the real, operational data included in the Knowledge Graph, be it physically or via mappings (such as SQL/PGQ) to external databases.
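The idea of business instances reached "via mappings to external databases" can be sketched without any graph engine: rows are read from relational tables on demand and presented as nodes and edges. SQL/PGQ lets you declare such views in SQL itself (`CREATE PROPERTY GRAPH`), but SQLite does not support that, so this Python version with a hypothetical table-to-label mapping only mimics the concept.

```python
"""Sketch: a property-graph view derived from relational tables (mapping, not copying)."""
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER REFERENCES customer(id), total REAL);
    INSERT INTO customer VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO orders VALUES (10, 1, 120.0), (11, 1, 80.0), (12, 2, 15.5);
""")

# Hypothetical mapping: table -> node label; the orders.customer_id foreign key -> PLACED.
NODE_MAP = {"customer": "Customer", "orders": "Order"}


def graph_view(con):
    """Read rows on demand and present them as nodes and edges."""
    nodes = []
    for table, label in NODE_MAP.items():
        cur = con.execute(f"SELECT * FROM {table} ORDER BY id")  # table names come from our own mapping
        cols = [d[0] for d in cur.description]
        for row in cur:
            props = dict(zip(cols, row))
            nodes.append((f"{label}:{props['id']}", label, props))
    edges = [
        (f"Customer:{cid}", "PLACED", f"Order:{oid}")
        for oid, cid in con.execute("SELECT id, customer_id FROM orders ORDER BY id")
    ]
    return nodes, edges


nodes, edges = graph_view(con)
print(len(nodes), len(edges))  # 5 3
print(edges[0])                # ('Customer:1', 'PLACED', 'Order:10')
```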

One thing that is immediately obvious is that the combination of metadata and data creates a complex, highly connected graph. In other words, you need a property graph to deal with it, and you need all the help you can get for maintaining the semantics and the relationships on the fly. Generative AI (though its output must be curated) is certainly on the roadmap for assistance with data modelling, and data models as code are also a necessity.
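To see why data plus metadata quickly becomes highly connected, here is a minimal sketch that puts all three subgraphs into one edge list and walks from a business record to its concept, its physical store and its known data quality issues. The node names, prefixes and relationship types are hypothetical.

```python
"""Sketch: the three subgraphs in one graph, queried across layers."""
# Hypothetical edges: (from, relationship type, to). Name prefixes mark the layer:
# sem: = semantic layer, tech: = technical metadata, biz: = business instance.
EDGES = [
    ("biz:customer/1", "INSTANCE_OF", "sem:Customer"),
    ("sem:Customer", "IMPLEMENTED_BY", "tech:crm.customer"),
    ("tech:crm.customer", "STORED_IN", "tech:postgres-prod"),
    ("tech:crm.customer", "HAS_ISSUE", "tech:dq/missing-country"),
    ("sem:Customer", "PLACES", "sem:Order"),
]


def neighbours(node, rel_type):
    """Targets of outgoing edges of the given type."""
    return [t for (s, r, t) in EDGES if s == node and r == rel_type]


def explain(instance):
    """Walk from a business record to its concept, physical stores and data quality issues."""
    out = {}
    for concept in neighbours(instance, "INSTANCE_OF"):
        tables = neighbours(concept, "IMPLEMENTED_BY")
        out["concept"] = concept
        out["tables"] = tables
        out["stores"] = [s for t in tables for s in neighbours(t, "STORED_IN")]
        out["issues"] = [i for t in tables for i in neighbours(t, "HAS_ISSUE")]
    return out


print(explain("biz:customer/1"))
# {'concept': 'sem:Customer', 'tables': ['tech:crm.customer'],
#  'stores': ['tech:postgres-prod'], 'issues': ['tech:dq/missing-country']}
```

Even this tiny example crosses all three layers in a single query; at real scale, those cross-layer edges are what make the combined graph dense.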

Also note that “Ontologies” is meant in a broad sense; you might want to include information available via APIs from external knowledge graphs or search interfaces maintained by Google, Apple, Microsoft, Wikidata or even the EU Knowledge Graph. Not to mention generative AI services.

You may follow the sequence or explore the site as you wish:

The guy behind this site is Thomas Frisendal: